Detecting Web Attacks Using A Convolutional Neural Network

Introduction

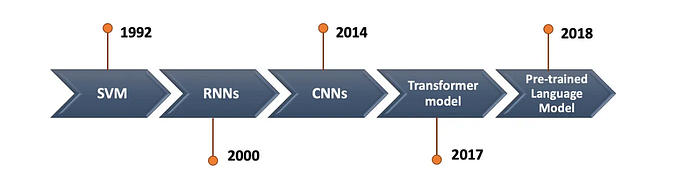

Today we’re going to talk about Convolutional Neural Networks and how they can be used in the cybersecurity domain. As some of you may know, machine learning applications for cybersecurity have become quite popular in the past few years, and many different types of models are used to detect different types of attacks.

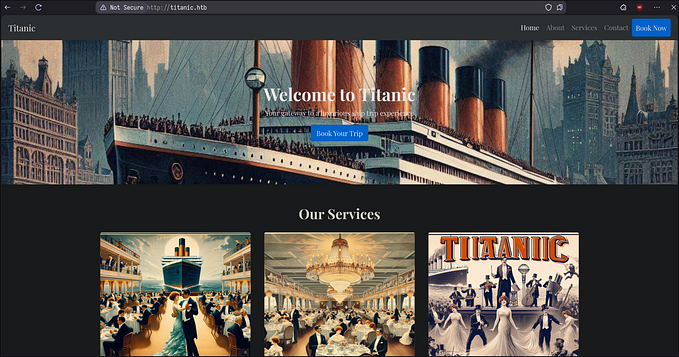

The specific attack vector we’ll be focusing on today is web exploitation. Since most apps nowadays are web apps exposed to the public internet, many cyberattacks target these public-facing web applications. One example of a web exploitation technique is SQL injection. In this attack, the attacker injects SQL into an input field or URL parameter so that the application's database query is altered, allowing sensitive data to be read or modified.

In the example above, a specially crafted URL query is sent to a web server to exploit its input handling and retrieve sensitive data.
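Here is a minimal sketch of the idea using Python's built-in sqlite3 module. The users table, its rows and the find_user helper are hypothetical. The point is only to show how concatenating untrusted input into a SQL string lets a crafted value rewrite the query.

```python
import sqlite3

# Hypothetical in-memory database with two users and their secrets
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "a-secret"), ("bob", "b-secret")])

def find_user(name):
    # VULNERABLE on purpose: the input is pasted directly into the SQL text
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

print(find_user("alice"))               # → [('alice', 'a-secret')]
# The quote closes the string literal, and OR '1'='1' makes the WHERE clause always true
print(len(find_user("x' OR '1'='1")))   # → 2
```

The crafted value returns every row in the table, including secrets the caller never asked for.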

Another web exploitation technique is cross-site scripting (XSS). This attack enables attackers to inject client-side scripts into web pages viewed by other users.

In the example above, an HTML tag is passed in the URL. The attacker sends a string containing HTML that will later be parsed by another user's browser, where the injected code may execute on the victim's machine.
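The following minimal sketch shows how such a payload ends up in a page. It assumes a hypothetical render_search_page function that naively reflects the q URL parameter into its HTML. It also shows that the percent-encoded form of the tag, as it might appear in a raw request URL, decodes back into a live script element.

```python
from urllib.parse import parse_qs, urlparse

def render_search_page(url):
    # Hypothetical, deliberately vulnerable page: the "q" parameter is
    # decoded and pasted into the HTML without any escaping
    q = parse_qs(urlparse(url).query).get("q", [""])[0]
    return "<p>Results for: " + q + "</p>"

# %3C = "<", %3E = ">", %2F = "/"
attack = "http://example.com/search?q=%3Cscript%3Ealert(1)%3C%2Fscript%3E"
print(render_search_page(attack))
# → <p>Results for: <script>alert(1)</script></p>
```

Any visitor who opens this URL would get a page containing an executable script tag.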

I propose using a machine learning model to detect these attacks. I’ll train the model on a web attack dataset found on GitHub, which contains both benign and malicious queries. I highly recommend checking it out.

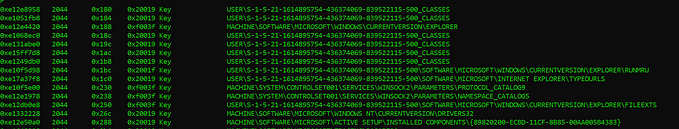

Here is an example of some of the queries in the dataset.

Essentially, these are all just URL queries sent to a web application.

To detect the malicious requests, we will use a Convolutional Neural Network.

Why Convolutional Neural Networks?

CNNs are most often used in computer vision. A classic example is the MNIST problem, where a CNN is used to classify handwritten digits.

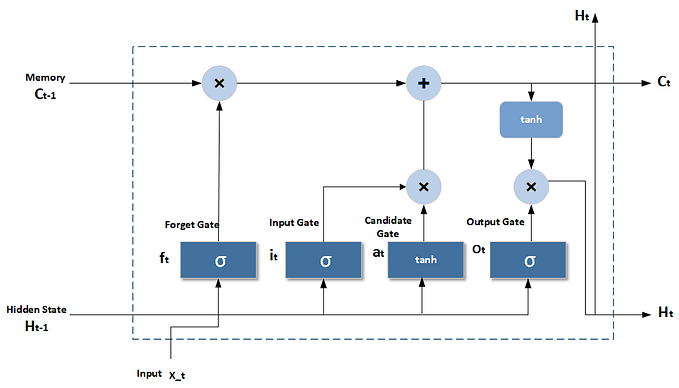

But the obvious question is this: how can such a neural network classify whether a URL has malicious intent? It's a good question, and the idea may seem odd at first. Images are essentially matrices whose cells hold values between 0 and 255, encoding shades from black to white. These values are fed into a complex function (the neural network), which outputs the relevant information about the image. In the MNIST problem, for example, the network has to classify digits: it takes an image as input and outputs a digit. For a more in-depth explanation of how Convolutional Neural Networks work, check out this article: https://towardsdatascience.com/convolutional-neural-networks-explained-9cc5188c4939

The question remains: how do we encode a URL into data that a convolutional neural network can work with?

We are going to use the Unicode encoding of each character, that is, the number (code point) that represents the character in the Unicode table. This is part of our preprocessing. A neural network does not understand text; it is a function that operates on mathematical objects such as numbers, vectors and matrices. The network then learns the features needed to distinguish malicious from non-malicious queries based on this encoding.
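In Python, this encoding is just the built-in ord function. The sketch below uses a hypothetical encode_query helper and applies it to a couple of strings. It uses the same cut-off as the generate_dataset function later in this article: code points of 129 and above are replaced by 0.

```python
def encode_query(query):
    # Map each character to its Unicode code point; code points of 129
    # and above are replaced by 0 (the cut-off used in generate_dataset)
    return [ord(c) if ord(c) < 129 else 0 for c in query]

print(encode_query("<script>"))  # → [60, 115, 99, 114, 105, 112, 116, 62]
print(encode_query("café"))      # → [99, 97, 102, 0]
```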

In terms of storage, this is a much better option than other encodings such as character embeddings, word embeddings and, especially, one-hot encoding. A character embedding maps each character to a vector of several numbers, which already costs more than storing a single code point per character. One-hot encoding is costlier still, since each character becomes a vector with one entry per symbol in the alphabet. Word embeddings are even harder to apply here, because URLs are not sentences and don't split naturally into words.
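Here is a rough back-of-the-envelope comparison for a single 784-character query. It assumes code points stored as uint8, a hypothetical 32-dimensional float32 character embedding, and a one-hot encoding over the 129 symbols (0 to 128) kept by the preprocessing.

```python
import numpy as np

SEQ_LEN = 784    # characters per query (28 x 28)
EMBED_DIM = 32   # hypothetical embedding size
VOCAB = 129      # code points 0..128 kept by the encoding

codes = np.zeros(SEQ_LEN, dtype=np.uint8)                    # one number per character
embedded = np.zeros((SEQ_LEN, EMBED_DIM), dtype=np.float32)  # one vector per character
one_hot = np.zeros((SEQ_LEN, VOCAB), dtype=np.uint8)         # one indicator row per character

for name, arr in [("code points", codes), ("embedding", embedded), ("one-hot", one_hot)]:
    print(f"{name:12s} {arr.nbytes:>8d} bytes")
# code points       784 bytes
# embedding      100352 bytes
# one-hot        101136 bytes
```

The saving applies to storing the encoded dataset. Once fed to the network, inputs are typically cast to float32.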

We’ll reshape the encoded queries into 28x28 matrices (784 characters per query), so they fit our pre-existing MNIST neural network. Yes, we’re reusing almost the same architecture.
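For a single query, the truncate, encode, pad and reshape steps can be sketched as follows. The query_to_matrix name is hypothetical. It mirrors what generate_dataset does per row, except that it stores values as uint8, which is an assumption.

```python
import numpy as np

SIDE = 28
MAX_LEN = SIDE * SIDE  # 784 characters fit in one "image"

def query_to_matrix(query):
    # Keep at most 784 characters, encode them, and zero-pad to a full 28x28 grid
    codes = [ord(c) if ord(c) < 129 else 0 for c in query[:MAX_LEN]]
    codes += [0] * (MAX_LEN - len(codes))
    return np.array(codes, dtype=np.uint8).reshape(SIDE, SIDE)

m = query_to_matrix("/search?q=<script>alert(1)</script>")
print(m.shape)   # → (28, 28)
print(m[0, :5])  # codes for "/sear"
```

Short queries fill the first rows and leave the rest as zeros. Queries longer than 784 characters are simply cut off.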

```python
from keras import layers, metrics
from keras.models import Sequential
from keras.layers import Dense, Conv2D, Flatten, MaxPool2D

model = Sequential()
# Each sample is a 28x28 "image" with one channel of character codes
model.add(layers.Input(shape=(28, 28, 1)))
model.add(Conv2D(64, (5, 5), activation='relu', kernel_initializer='he_uniform'))
model.add(layers.BatchNormalization())
model.add(Conv2D(32, (5, 5), activation='relu'))
model.add(layers.Dropout(0.2))
model.add(MaxPool2D((3, 3)))
model.add(Flatten())
model.add(Dense(100, activation='relu', kernel_initializer='he_uniform'))
# Two output classes: index 0 = benign, index 1 = malicious
model.add(Dense(2, activation='softmax'))

# compile model
# class_id=1 tracks recall/precision for the malicious class; without it,
# both one-hot columns are pooled together
model.compile(optimizer='adam', loss='categorical_crossentropy',
              metrics=['accuracy', metrics.Recall(class_id=1), metrics.Precision(class_id=1)])
```
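To see what the network does to a 28x28 input, here is a small hand calculation of the feature-map sizes. It assumes the Keras defaults of 'valid' padding and stride 1 for Conv2D, and a stride equal to the pool size for MaxPool2D. BatchNormalization and Dropout don't change shapes. The conv_out helper is hypothetical.

```python
def conv_out(size, kernel, stride=1):
    # 'valid' padding: output = floor((size - kernel) / stride) + 1
    return (size - kernel) // stride + 1

side = 28
side = conv_out(side, 5)            # Conv2D(64, (5, 5))  -> 24
side = conv_out(side, 5)            # Conv2D(32, (5, 5))  -> 20
side = conv_out(side, 3, stride=3)  # MaxPool2D((3, 3))   -> 6
flat = side * side * 32             # Flatten: 6 * 6 * 32 feature values
print(side, flat)                   # → 6 1152
```

So the Dense(100) layer receives a vector of 1152 features per query.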

Next, for preprocessing, we’ll use the generate_dataset function I wrote. It takes a dataset of raw queries and labels (a DataFrame whose first column is the query and second column is the label), encodes every character as its Unicode code point, and attaches a one-hot label to each sample.

```python
import numpy as np

def generate_dataset(df):
    tensorlist = []
    labelist = []
    # Generate our dataset sample by sample
    for row in df.values:
        query = row[0]
        label = row[1]
        # Encode the first 784 characters as their Unicode code points;
        # code points of 129 and above are replaced by 0
        url_part = [ord(x) if ord(x) < 129 else 0 for x in query[:784]]
        # Pad the remaining cells with zeros so every sample fills a 28*28 image
        url_part += [0] * (784 - len(url_part))
        x = np.array(url_part).reshape(28, 28)
        # One-hot label y: [0, 1] = malicious, [1, 0] = benign
        if label == 1:
            y = np.array([0, 1], dtype=np.int8)
        else:
            y = np.array([1, 0], dtype=np.int8)
        tensorlist.append(x)
        labelist.append(y)
    return tensorlist, labelist
```
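The model's Input layer expects samples of shape (28, 28, 1), while generate_dataset returns lists of 28x28 matrices and one-hot labels. Here is a minimal numpy sketch of the glue step. The to_model_arrays name is hypothetical. Dividing by 128 to scale the code points into [0, 1] is also an assumption, not part of the original code.

```python
import numpy as np

def to_model_arrays(tensorlist, labelist):
    # Stack the 28x28 matrices and add a trailing channel axis -> (N, 28, 28, 1)
    x = np.stack(tensorlist).astype(np.float32)[..., np.newaxis]
    # Assumption: scale code points (0..128) into [0, 1]
    x /= 128.0
    y = np.stack(labelist).astype(np.float32)
    return x, y

# Toy inputs shaped like generate_dataset's output
tensors = [np.zeros((28, 28), dtype=np.int64), np.full((28, 28), 64)]
labels = [np.array([1, 0], dtype=np.int8), np.array([0, 1], dtype=np.int8)]
x, y = to_model_arrays(tensors, labels)
print(x.shape, y.shape)  # → (2, 28, 28, 1) (2, 2)
```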

Now that the dataset is ready, it’s time to train and test the model.
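The article doesn't show how the data is split, so here is a hypothetical shuffled train/test split using numpy only. The 20% test fraction and the fixed seed are assumptions. scikit-learn's train_test_split would do the same job.

```python
import numpy as np

def train_test_split_arrays(x, y, test_fraction=0.2, seed=0):
    # Shuffle indices once so benign and malicious samples are mixed
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    n_test = int(len(x) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return x[train_idx], x[test_idx], y[train_idx], y[test_idx]

# Toy data: 10 samples alternating between the two one-hot labels
x = np.zeros((10, 28, 28, 1), dtype=np.float32)
y = np.tile(np.array([[1, 0], [0, 1]], dtype=np.float32), (5, 1))
x_train, x_test, y_train, y_test = train_test_split_arrays(x, y)
print(x_train.shape, x_test.shape)  # → (8, 28, 28, 1) (2, 28, 28, 1)
```

With real data, the resulting arrays could then be passed to the model compiled earlier. For example, call model.fit(x_train, y_train, epochs=2, validation_data=(x_test, y_test)) and then model.evaluate(x_test, y_test).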

As you can see, after only about 2 epochs the neural network is already performing remarkably well.
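On held-out data, precision and recall for the malicious class say more than accuracy alone. If benign queries outnumber attacks, a model can reach high accuracy while still missing many attacks. Here is a minimal numpy sketch with a hypothetical malicious_precision_recall helper. It assumes y_prob comes from something like model.predict(x_test) and that labels are one-hot, as produced by generate_dataset.

```python
import numpy as np

def malicious_precision_recall(y_true, y_prob):
    # Column 1 is the malicious class, matching generate_dataset's [0, 1] label
    true_mal = np.argmax(y_true, axis=1) == 1
    pred_mal = np.argmax(y_prob, axis=1) == 1
    tp = np.sum(true_mal & pred_mal)   # attacks correctly flagged
    fp = np.sum(~true_mal & pred_mal)  # benign queries flagged as attacks
    fn = np.sum(true_mal & ~pred_mal)  # attacks that slipped through
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

y_true = np.array([[0, 1], [0, 1], [1, 0], [1, 0]])
y_prob = np.array([[0.1, 0.9], [0.7, 0.3], [0.2, 0.8], [0.9, 0.1]])
precision, recall = malicious_precision_recall(y_true, y_prob)
print(precision, recall)  # one true positive, one false positive, one miss
```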

Check out the code on GitHub: