Fundamentals of SIEM

This blog is for anyone interested in working with a SIEM tool.

What is SIEM?

SIEM stands for security information and event management. SIEM software combines security information management (SIM) and security event management (SEM) to provide real-time analysis of logs and of the security alerts generated by applications and network hardware. An organisation generates a large volume of logs all the time, and a SIEM tool makes them manageable with functions to collect, transfer, parse, aggregate, correlate, store and alert on them. SIEM software gives organisations analytics, response capabilities, dashboards, log modification, correlation, alerts and more. With a SIEM we can create rules that the software matches against incoming events. We can also write rules to detect advanced threats from APT (Advanced Persistent Threat) groups by using resources such as the MITRE ATT&CK framework.

Working of SIEM

SIEM software starts by collecting logs from various sources, such as network devices, applications, security devices, antivirus software and other devices connected to the internal network, and then categorises them. Next comes rule creation: we write rules for specific threats. For example, if an IP address is running a web vulnerability scan (using OpenVAS or another scanner) against one of our subdomains, we can create a rule that generates an alert for such IPs, and whenever such an event occurs the SIEM will raise an alert. This makes our investigations more efficient.
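A scanner rule like this is often implemented as a threshold over a sliding time window. The Python sketch below flags any source IP that triggers many HTTP 404 responses in a short period, which is one common symptom of automated scanning. The `WebEvent` fields, the threshold of 20 and the 60-second window are illustrative assumptions, not settings from any particular SIEM.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class WebEvent:
    ts: float     # event time in seconds (hypothetical schema)
    src_ip: str   # client IP address
    status: int   # HTTP status code returned by our web server


def detect_scanners(events, threshold=20, window=60.0):
    """Return the set of IPs with at least `threshold` 404s inside any `window`-second span."""
    recent = defaultdict(deque)  # src_ip -> timestamps of that IP's recent 404s
    flagged = set()
    for ev in sorted(events, key=lambda e: e.ts):
        if ev.status != 404:
            continue
        q = recent[ev.src_ip]
        q.append(ev.ts)
        # Discard 404s that have slid out of the time window
        while ev.ts - q[0] > window:
            q.popleft()
        if len(q) >= threshold:
            flagged.add(ev.src_ip)
    return flagged


# 30 rapid 404s from one IP (looks like a scan) and one normal request from another
events = [WebEvent(ts=i * 0.5, src_ip="203.0.113.7", status=404) for i in range(30)]
events.append(WebEvent(ts=5.0, src_ip="198.51.100.2", status=200))
print(detect_scanners(events))  # {'203.0.113.7'}
```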

Logs are collected in two ways:

- Log Agents

- Agentless

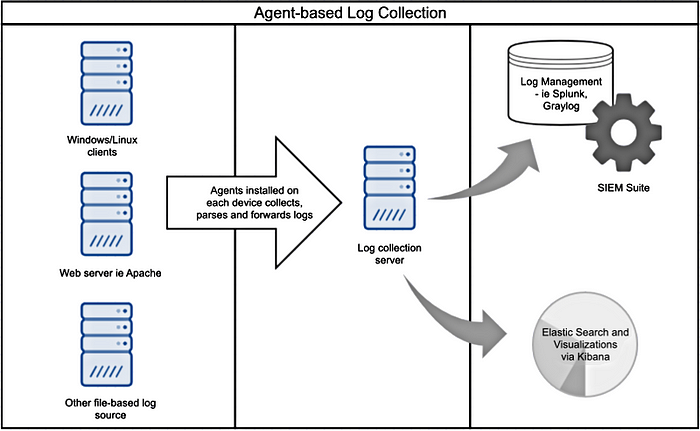

Log Agents

In simple words, a log agent is software installed on every node from which logs need to be collected. Agents typically provide features such as parsing, log rotation, buffering and encryption; some of these are covered below. Logs can be modified on the agent before they are sent to the destination. For example, if an account login log contains “username” = “mike”; “password” = “172#prod@123”, the agent can split it into two parts and forward them as:

Message 1: “username” = “mike”

Message 2: “password” = “172#prod@123”
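The splitting described above could look roughly like this on the agent side. This is a minimal sketch that assumes the raw line uses straight double quotes and `;`-separated `"key" = "value"` pairs; `split_log` is a hypothetical helper name, and one message per field is just the behaviour from the example.

```python
import re

# Matches "key" = "value" pairs, as in the login log example
PAIR_RE = re.compile(r'"([^"]+)"\s*=\s*"([^"]*)"')


def split_log(raw: str) -> list[str]:
    """Split a key-value log line into one message per field (hypothetical agent step)."""
    return [f'"{key}" = "{value}"' for key, value in PAIR_RE.findall(raw)]


raw = '"username" = "mike"; "password" = "172#prod@123"'
for i, msg in enumerate(split_log(raw), start=1):
    print(f"Message {i}: {msg}")
# Message 1: "username" = "mike"
# Message 2: "password" = "172#prod@123"
```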

Syslog -> Syslog is a standard network protocol for transferring logs. TLS can be used to encrypt logs in transit. To transfer a log over syslog, it first has to be formatted as a syslog message.

A typical syslog message layout:

Timestamp-Source Device-Facility-Severity-Message Number-Message Text

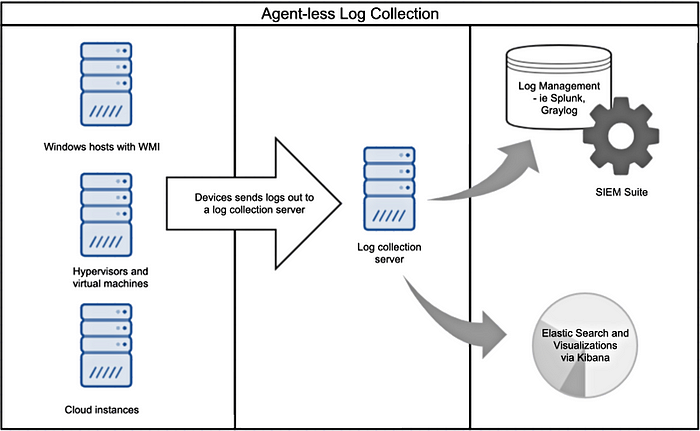
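The layout above is a general description; the standardized syslog message format is defined in RFC 5424, where a single PRI value encodes facility and severity as `facility * 8 + severity`. The sketch below only builds such a message as a string. The host name, app name, message ID and text are made up, and actually sending the message (over UDP, TCP or TLS) is left out.

```python
from datetime import datetime, timezone

# RFC 5424 numeric codes (small subset)
FACILITY_AUTH = 4   # security/authorization messages
SEVERITY_INFO = 6   # informational


def format_syslog(facility, severity, hostname, app, msgid, text, ts=None):
    """Build an RFC 5424 line: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG."""
    pri = facility * 8 + severity  # PRI packs facility and severity into one number
    if ts is None:
        ts = datetime.now(timezone.utc)
    timestamp = ts.isoformat(timespec="milliseconds").replace("+00:00", "Z")
    # "-" is the RFC 5424 NILVALUE, used here for PROCID and STRUCTURED-DATA
    return f"<{pri}>1 {timestamp} {hostname} {app} - {msgid} - {text}"


line = format_syslog(
    FACILITY_AUTH, SEVERITY_INFO, "web01", "sshd", "LOGIN",
    "Accepted password for mike",
    ts=datetime(2024, 1, 15, 10, 30, 0, tzinfo=timezone.utc),
)
print(line)
# <38>1 2024-01-15T10:30:00.000Z web01 sshd - LOGIN - Accepted password for mike
```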

Agentless

In agentless collection, the client prefers not to install any software on its nodes, and logs are collected remotely instead, for example over SSH. This method requires giving the SIEM login credentials for those systems, which is risky because the credentials could be stolen or misused.

Log Parsing

Log parsing is a key step a SIEM uses to extract data elements (such as the timestamp, source IP and username) from a raw log. Because parsed logs from different sources share common fields, we can correlate them to analyse incidents.
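As an illustration, the sketch below parses a typical OpenSSH “Failed password” line from a Linux auth log into named fields. The regex only covers this one message shape, and the output field names (including the `event` label) are assumptions chosen for the example; a SIEM would map them to its own schema.

```python
import re

# One OpenSSH "Failed password" line in a syslog-style auth log
SSH_FAIL_RE = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d+ \d{2}:\d{2}:\d{2}) (?P<host>\S+) sshd\[\d+\]: "
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<src_ip>\d{1,3}(?:\.\d{1,3}){3}) port (?P<port>\d+)"
)


def parse_ssh_failure(raw):
    """Extract normalized fields from a raw sshd line, or return None if it doesn't match."""
    m = SSH_FAIL_RE.match(raw)
    if m is None:
        return None
    fields = m.groupdict()
    fields["port"] = int(fields["port"])
    fields["event"] = "auth_failure"  # common event name so other sources can be correlated
    return fields


raw = "Jan 15 10:30:00 web01 sshd[1234]: Failed password for mike from 203.0.113.7 port 52211 ssh2"
print(parse_ssh_failure(raw))
# {'timestamp': 'Jan 15 10:30:00', 'host': 'web01', 'user': 'mike',
#  'src_ip': '203.0.113.7', 'port': 52211, 'event': 'auth_failure'}
```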

Log Aggregation

Log aggregation is the point to which all generated logs are sent. Here we can modify logs according to what we want to send to the destination. For example, if a web server log contains the user agent, requested file, HTTP method and so on, but we only need the status code (404, 200, etc.), we can parse the log and send only that part to the destination.
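The web server example can be sketched as follows: parse a line in the common Apache/Nginx “combined” access-log format and forward only the fields we want, by default just the status code. The regex handles only well-formed lines in that format, and `reduce_event` is a hypothetical helper name.

```python
import re

# Apache/Nginx "combined" access-log format
ACCESS_RE = re.compile(
    r'^(?P<client>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def reduce_event(raw, keep=("status",)):
    """Parse an access-log line and return only the fields named in `keep` (as strings)."""
    m = ACCESS_RE.match(raw)
    if m is None:
        return None  # unparseable lines are not forwarded
    return {name: m.group(name) for name in keep}


raw = ('203.0.113.7 - - [15/Jan/2024:10:30:00 +0000] "GET /admin HTTP/1.1" 404 196 '
       '"-" "Mozilla/5.0"')
print(reduce_event(raw))  # {'status': '404'}
```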

Log Aggregator process

Before logs are sent to their final destination they need to be processed. Processing happens in three ways:

- Parsing

- Enrichment: adding context to a log so that investigations are more efficient. For example, since the log contains an IP address, we can add its geolocation.

- Filtering: sometimes a log needs to be modified or filtered before it is sent to the destination. For example, if a log's timestamp isn't in the expected format, we can convert it to the right one.
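The three steps can be chained as a small pipeline. This is a sketch under stated assumptions: events arrive already parsed as dicts, `GEO_TABLE` is a made-up stand-in for a real GeoIP database, and events without a source IP are the ones we choose to filter out.

```python
from datetime import datetime, timezone

# Made-up lookup table; a real pipeline would query a GeoIP database instead
GEO_TABLE = {"203.0.113.7": "NL", "198.51.100.2": "US"}


def normalize_time(event):
    """Fix-up step: convert an Apache-style timestamp to ISO 8601 in UTC."""
    ts = datetime.strptime(event["time"], "%d/%b/%Y:%H:%M:%S %z")
    event["time"] = ts.astimezone(timezone.utc).isoformat()
    return event


def enrich(event):
    """Enrichment step: add a country code looked up from the source IP."""
    event["src_country"] = GEO_TABLE.get(event["src_ip"], "unknown")
    return event


def process(events):
    """Yield processed copies of parsed events, filtering out ones with no source IP."""
    for event in events:
        if not event.get("src_ip"):
            continue  # filtering: we cannot attribute or enrich this event
        yield enrich(normalize_time(dict(event)))  # dict() copy leaves the input untouched


parsed = [
    {"src_ip": "203.0.113.7", "time": "15/Jan/2024:11:30:00 +0100", "status": "404"},
    {"src_ip": "", "time": "15/Jan/2024:11:31:00 +0100", "status": "200"},
]
print(list(process(parsed)))
# [{'src_ip': '203.0.113.7', 'time': '2024-01-15T10:30:00+00:00', 'status': '404', 'src_country': 'NL'}]
```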

EPS

EPS stands for events per second, the number of log events the SIEM receives each second. The load on aggregation and storage is directly proportional to EPS: the higher the EPS, the more processing and storage are needed. If EPS is high and a single aggregator handles every log, it will be overloaded, so we deploy multiple aggregators and spread the load across them. This is known as scaling the aggregator.
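A rough worked estimate makes the proportionality concrete: daily raw volume = EPS × average event size × 86,400 seconds, and the number of aggregators is the EPS divided by what one aggregator can handle, rounded up. The figures below (5,000 EPS, 500-byte events, 2,000 EPS per aggregator) are assumptions for illustration only.

```python
import math

SECONDS_PER_DAY = 86_400


def daily_storage_gb(eps, avg_event_bytes):
    """Raw log volume per day in GB (1 GB = 10**9 bytes), before compression."""
    return eps * avg_event_bytes * SECONDS_PER_DAY / 10**9


def aggregators_needed(eps, eps_per_aggregator):
    """Smallest number of aggregators whose combined capacity covers the EPS."""
    return math.ceil(eps / eps_per_aggregator)


# Assumed figures for illustration only
eps = 5_000
print(daily_storage_gb(eps, avg_event_bytes=500))         # 216.0
print(aggregators_needed(eps, eps_per_aggregator=2_000))  # 3
```

Multiplying the daily figure by the retention period (90 days gives 19,440 GB in this example, before compression) gives a first estimate of the storage to plan for.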

Storage of the logs

Once logs reach the destination they need to be stored, which requires a large amount of storage space. The storage must be large enough and efficient, and data must be retrievable quickly so that investigations are not slowed down.

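We can also use write once, read many (WORM) storage: since all modification has already happened earlier in the pipeline, stored logs never need to change, and WORM prevents them from being altered afterwards.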
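To make the WORM idea concrete, the toy class below accepts each record exactly once, refuses any later overwrite and allows unlimited reads. Real WORM guarantees come from the storage layer itself (for example, immutability or object-lock settings on a storage system), not from application code; `WormStore` is a hypothetical illustration only.

```python
class WormStore:
    """In-memory illustration of write-once, read-many semantics (not a real WORM device)."""

    def __init__(self):
        self._records = {}  # record_id -> bytes; there is deliberately no delete method

    def write(self, record_id, data: bytes):
        # A record may be written exactly once; any later write is refused
        if record_id in self._records:
            raise PermissionError(f"record {record_id!r} is already written and cannot be changed")
        self._records[record_id] = bytes(data)

    def read(self, record_id):
        # Reads are unlimited
        return self._records[record_id]


store = WormStore()
store.write("web01/2024-01-15/0001", b"Accepted password for mike")
try:
    store.write("web01/2024-01-15/0001", b"tampered entry")
except PermissionError as err:
    print("Blocked:", err)
print(store.read("web01/2024-01-15/0001"))  # b'Accepted password for mike'
```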

Alerts

Now that the logs are processed and stored, we need to create rules to detect unusual activity on our network, devices and web pages. If a suspicious log is created today, we need the alert for it within a strict timeframe. What use is an alert that arrives 2–3 days later? None! So fast indexing capability is a must.

Examples of rules that would trigger an alert:

- A new user with high-level permissions is added to the main admin group.

- Someone repeatedly clicks “reset password” on a login page, for example 15 times.
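Both example rules can be sketched as simple checks over a batch of parsed events, where the batch stands for one time window (say, the last 10 minutes). The event schema (`type`, `user`, `group`, `src_ip`) and the privileged group names are assumptions; only the threshold of 15 comes from the example.

```python
from collections import defaultdict

PRIV_GROUPS = {"Domain Admins", "Administrators"}  # assumed names of privileged groups
RESET_THRESHOLD = 15                               # from the password-reset example


def check_rules(events):
    """Evaluate the two example rules over one batch of events and return alert messages."""
    alerts = []
    resets = defaultdict(int)  # src_ip -> password-reset requests seen in this batch
    for ev in events:
        if ev["type"] == "user_created" and ev.get("group") in PRIV_GROUPS:
            alerts.append(f"Privileged user created: {ev['user']} in {ev['group']}")
        elif ev["type"] == "password_reset":
            resets[ev["src_ip"]] += 1
            if resets[ev["src_ip"]] == RESET_THRESHOLD:  # alert once per source IP
                alerts.append(f"{RESET_THRESHOLD} password resets from {ev['src_ip']}")
    return alerts


events = [{"type": "user_created", "user": "eve", "group": "Domain Admins"}]
events += [{"type": "password_reset", "src_ip": "198.51.100.9"}] * 15
print(check_rules(events))
# ['Privileged user created: eve in Domain Admins', '15 password resets from 198.51.100.9']
```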

Techniques

- Blacklist: in this technique we create a list of known malicious indicators. For example, we can use open-source tools to compile a list of known malicious processes; if one of those processes shows up in the logs, an alert is triggered. We can also keep a list of banned IP addresses.

- Whitelist: in this technique we create a list of known normal activity that should not generate alerts. This list needs to be updated regularly.
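The difference between the two techniques shows up clearly in code: a blacklist alerts only on known-bad entries, while a whitelist alerts on anything that is not known-good. The list contents below are hypothetical examples, and the event fields `process` and `dst_ip` are assumptions.

```python
# Hypothetical example lists; in practice they come from open-source feeds and your own baseline
BLACKLIST_PROCESSES = {"mimikatz.exe"}
BLACKLIST_IPS = {"203.0.113.66"}
WHITELIST_PROCESSES = {"svchost.exe", "explorer.exe", "chrome.exe"}


def blacklist_alert(event):
    """Blacklist: alert only when the event matches a known-bad process or IP."""
    return event["process"].lower() in BLACKLIST_PROCESSES or event.get("dst_ip") in BLACKLIST_IPS


def whitelist_alert(event):
    """Whitelist: alert on any process that is NOT on the known-good list."""
    return event["process"].lower() not in WHITELIST_PROCESSES


event = {"process": "Unknown.exe", "dst_ip": "192.0.2.10"}
print(blacklist_alert(event))  # False: not a known-bad process or IP
print(whitelist_alert(event))  # True: not a known-good process
```

A blacklist misses anything new, while a whitelist catches new activity but alerts on every legitimate process it doesn't list yet, which is why it needs regular updates.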

Thanks for reading. Feel free to ask questions in comments and share this writeup if you found it useful.