The Hidden Risk in AI-Generated Code: A Silent Backdoor

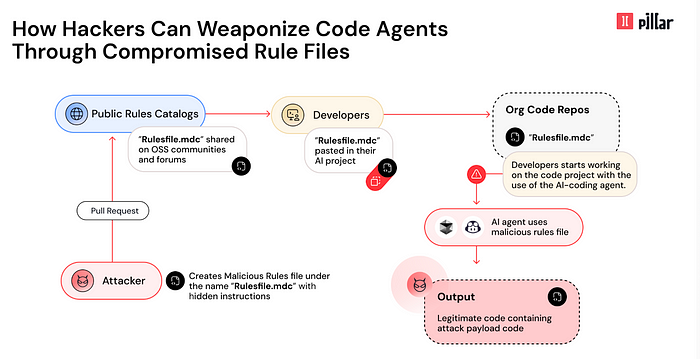

Researchers at Pillar Security have described an attack on AI coding assistants such as GitHub Copilot and Cursor: by tampering with the rule files that guide these tools, an attacker can make them generate code that contains silent backdoors.

How the Attack Works

1️⃣ Rules File Poisoning — Attackers inject hidden malicious instructions into AI rule files, altering how code is generated.

2️⃣ Unicode Obfuscation — Invisible characters conceal harmful payloads from human reviewers but remain readable to AI models.

3️⃣ Semantic Hijacking — Subtle manipulations mislead AI models into producing insecure code, bypassing security best practices.

4️⃣ Persistent Compromise — Once a poisoned rule file enters a repository, it infects future AI-generated code, spreading via forks and dependencies.
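
To make the Unicode obfuscation step concrete, here is a minimal sketch of why a reviewer can miss hidden characters. The rule text and the specific zero-width code points are illustrative assumptions, not the payload from the original research; the helper name `escape_invisible` is hypothetical.

```python
# Hypothetical rule text. The zero-width characters below are harmless
# stand-ins chosen for illustration; they are not taken from a real attack.
visible = "Follow the project style guide."
poisoned = "Follow the project\u200b\u200d style guide.\u2060"


def escape_invisible(text: str) -> str:
    """Show non-ASCII and non-printable characters as backslash escapes."""
    return text.encode("unicode_escape").decode("ascii")


# Most editors and diff views render both strings identically,
# yet the strings are not equal and differ in length.
print(visible == poisoned)
print(len(visible), len(poisoned))

# Escaping makes the extra code points visible to a human reviewer.
print(escape_invisible(poisoned))
```

Anything that reads the raw file, including the AI model, receives the extra code points even though a reviewer looking at the rendered text does not see them.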

Mitigation Strategies

🔍 Audit Rule Files — Review AI configuration files for hidden Unicode characters and anomalies.

🛡 Apply AI-Specific Validation — Treat rule files with the same scrutiny as executable code.

📊 Monitor AI Outputs — Detect unexpected modifications, external dependencies, or security risks.
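
As a starting point for the audit step, the sketch below flags invisible format characters in a rule file. It assumes that scanning for the Unicode "Cf" (format) category is a reasonable first filter; that category covers zero-width characters, bidirectional controls, and tag characters, but this is one possible heuristic, not a complete defense. The function names are hypothetical.

```python
import unicodedata
from pathlib import Path


def find_hidden_chars(text):
    """Yield (line, column, code point, name) for each format character.

    Assumption: Unicode category "Cf" is used as the detection rule.
    Line and column numbers are 1-based.
    """
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, "UNNAMED")
                yield line_no, col, f"U+{ord(ch):04X}", name


def audit_file(path):
    """Return all findings for one file, ignoring a leading UTF-8 BOM."""
    text = Path(path).read_text(encoding="utf-8-sig")
    return list(find_hidden_chars(text))


def format_report(path, findings):
    """Render findings as 'path:line:col: U+XXXX NAME' lines."""
    return [f"{path}:{line}:{col}: {code} {name}"
            for line, col, code, name in findings]
```

A team could call `audit_file` on each rule file in a pre-commit hook or CI job and fail the build when the result is non-empty. Which files count as rule files depends on the assistant in use, so the paths are left to the caller. Expect some false positives: legitimate text such as emoji sequences joined with zero-width joiners also contains format characters.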

📖 Read more: “New Vulnerability in GitHub Copilot and Cursor: How Hackers Can Weaponize Code Agents” by Ziv Karliner, Pillar Security: https://www.pillar.security/blog/new-vulnerability-in-github-copilot-and-cursor-how-hackers-can-weaponize-code-agents

#AI #CyberSecurity #AIThreats #AIBackdoor #SupplyChainSecurity #DevSecOps #MachineLearningSecurity #GitHubCopilot #CursorAI #AIHacking #SoftwareSecurity #SecureCoding #ThreatIntelligence #UnicodeObfuscation #SemanticHijacking #CyberAttack #TechRisk #AIExploit #CodeSecurity #CyberAwareness